Introducing the Experimental info() Function

December 16, 2025 by Arve Knudsen

Enriching metrics with metadata labels can be surprisingly tricky in Prometheus, even if you're a PromQL wiz!

The PromQL join query traditionally used for this is inherently quite complex because it has to specify the labels to join on, the info metric to join with, and the labels to enrich with.

The new, still experimental info() function promises a simpler way, making label enrichment as simple as wrapping your query in a single function call.

In Prometheus 3.0, we introduced the info() function, a powerful new way to enrich your time series with labels from info metrics.

What's special about info() versus the traditional join query technique is that it relieves you from having to specify identifying labels, which info metric(s) to join with, and the ("data" or "non-identifying") labels to enrich with.

Note that "identifying labels" in this particular context refers to the set of labels that identify the info metrics in question, and are shared with associated non-info metrics.

They are the labels you would join on in a Prometheus join query.

Conceptually, they can be compared to foreign keys in relational databases.
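To make the contrast concrete, here is a minimal sketch of both approaches. The metric http_server_request_duration_seconds_count, the info metric target_info, the identifying labels job and instance, and the data label k8s_cluster_name are illustrative assumptions that do not come from this excerpt. Because info() is experimental, it likely needs to be enabled explicitly (at the time of writing, via --enable-feature=promql-experimental-functions).

```promql
# Traditional join: the query spells out the identifying labels (job, instance),
# the info metric to join with (target_info), and the data label to copy over.
sum by (k8s_cluster_name, http_status_code) (
    rate(http_server_request_duration_seconds_count[2m])
  * on (job, instance) group_left (k8s_cluster_name)
    target_info
)

# With info(): wrap the expression and, optionally, select which data labels
# to add. The identifying labels and the info metric are not spelled out.
sum by (k8s_cluster_name, http_status_code) (
  info(
    rate(http_server_request_duration_seconds_count[2m]),
    {k8s_cluster_name=~".+"}
  )
)
```

In the second query the only join-specific detail left is the optional data label selector; dropping it would add all data labels from the matching info metric.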

Beyond the main functionality, info() also solves a subtle yet critical problem that has plagued join queries for years: The "churn problem" that causes queries to fail when non-identifying info metric labels change, combined with missing staleness marking (as is the case with OTLP ingestion).

Whether you're working with OpenTelemetry resource attributes, Kubernetes labels, or any other metadata, the info() function makes your PromQL queries cleaner, more reliable, and easier to understand.

Visualizing Target Relabeling Rules in Prometheus 3.8.0

December 2, 2025 by Julius Volz (@juliusv)

Prometheus' target relabeling feature allows you to adjust the labels of a discovered target or even drop the target entirely. Relabeling rules, while powerful, can be hard to understand and debug. Your rules have to match the expected labels that your service discovery mechanism returns, and getting any step wrong could label your target incorrectly or accidentally drop it.

To help you figure out where things go wrong (or right), Prometheus 3.8.0 just added a relabeling visualizer to the Prometheus server's web UI that allows you to inspect how each relabeling rule is applied to a discovered target's labels. Let's take a look at how it works!

How Non-Developers Can Contribute to Prometheus

October 31, 2025 by Victoria Nduka (@nwanduka)

My first introduction to the Prometheus project was through the Linux Foundation mentorship program, where I conducted UX research. I remember the anxiety I felt when I was selected as a mentee. I was new not just to Prometheus, but to observability entirely. I worried I was in over my head, working in a heavily developer-focused domain with no development background.

That anxiety turned out to be unfounded. I went on to make meaningful contributions to the project, and I've learned that what I experienced is nearly universal among non-technical contributors to open source.

If you're feeling that same uncertainty, this post is for you. I'll share the challenges you're likely to face (or already face), why your contributions matter, and how to find your place in the Prometheus community.

PromCon is Only One Month Away; See You in Person or via Live Stream!

September 22, 2025 by Bartek Płotka (@bwplotka)

YACE is joining Prometheus Community

November 19, 2024 by Thomas Peitz (@thomaspeitz)

Yet Another Cloudwatch Exporter (YACE) has officially joined the Prometheus community! This move will make it more accessible to users and open new opportunities for contributors to enhance and maintain the project. There's also a blog post from Cristian Greco's point of view.

The early days

When I first started YACE, I had no idea it would grow to this scale. At the time, I was working with InVision AG (not to be confused with the design app), a company focused on workforce management software. They fully supported me in open-sourcing the tool, and with the help of my teammate Kai Forsthövel, YACE was brought to life.

Our first commit was back in 2018, with one of our primary goals being to make CloudWatch metrics easy to scale and automatically detect what to measure, all while keeping the user experience simple and intuitive. InVision AG was scaling their infrastructure up and down due to machine learning workloads and we needed something that detects new infrastructure easily. This focus on simplicity has remained a core priority. From that point on, YACE began to find its audience.

Announcing Prometheus 3.0

November 14, 2024 by The Prometheus Team

Following the recent release of Prometheus 3.0 beta at PromCon in Berlin, the Prometheus Team is excited to announce the immediate availability of Prometheus Version 3.0!

This latest version marks a significant milestone as it is the first major release in 7 years. Prometheus has come a long way in that time, evolving from a project for early adopters to becoming a standard part of the cloud native monitoring stack. Prometheus 3.0 aims to continue that journey by adding some exciting new features while largely maintaining stability and compatibility with previous versions.

The full 3.0 release adds some new features on top of the beta and also introduces a few additional breaking changes that we will describe in this article.

Prometheus 3.0 Beta Released

September 11, 2024 by The Prometheus Team

The Prometheus Team is proud to announce the availability of Prometheus Version 3.0-beta! You can download it here. As is traditional with a beta release, we do not recommend users install Prometheus 3.0-beta on critical production systems, but we do want everyone to test it out and find bugs.

In general, the only breaking changes are the removal of deprecated feature flags. The Prometheus team worked hard to ensure backwards-compatibility and not to break existing installations, so all of the new features described below build on top of existing functionality. Most users should be able to try Prometheus 3.0 out of the box without any configuration changes.

Our commitment to OpenTelemetry

March 13, 2024 by Goutham Veeramachaneni (@Gouthamve) and Carrie Edwards (@carrieedwards)

The OpenTelemetry project is an Observability framework and toolkit designed to create and manage telemetry data such as traces, metrics, and logs. It is gaining widespread adoption due to its consistent specification between signals and its promise to reduce vendor lock-in, which is something that we’re excited about.

Looking back at 2023

Over the past few years, we have collaborated with the OpenTelemetry community to make sure that OpenTelemetry and Prometheus support each other bidirectionally. This led to the drafting of the official specification to convert between the two systems, as well as the implementations that allow you to ingest Prometheus metrics into OpenTelemetry Collector and vice-versa.

Since then, we have spent a significant amount of time understanding the challenges faced by OpenTelemetry users when storing their metrics in Prometheus and, based on those, explored how we can address them. Some of the changes proposed need careful consideration to avoid breaking either side's operating promises, e.g. supporting both push and pull. At PromCon Berlin 2023, we attempted to summarize our ideas in one of the talks.

At our dev summit in Berlin, we spent the majority of our time discussing these changes and our general stance on OpenTelemetry in depth, and the broad consensus is that we want “to be the default store for OpenTelemetry metrics”!

We’ve formed a core group of developers to lead this initiative, and we are going to release a Prometheus 3.0 in 2024 with OTel support as one of its more important features. Here’s a sneak peek at what's coming in 2024.

The Schedule for the PromCon Europe 2023 is Live

September 1, 2023 by Matthias Loibl (@metalmatze)

PromCon Europe is the eighth conference fully dedicated to the Prometheus monitoring system

Berlin, Germany – September 1, 2023 – The CNCF and the Prometheus team released the two-day schedule for the single-track PromCon Europe 2023 conference happening in Berlin, Germany from September 28 to September 29, 2023. Attendees will be able to choose from 21 full-length (25min) sessions and up to 20 five-minute lightning talk sessions spanning diverse topics related to Prometheus.

Now in its 8th installment, PromCon brings together Prometheus users and developers from around the world to exchange knowledge, best practices, and experience gained through using Prometheus. The program committee reviewed 66 submissions that will provide a fresh and informative look into the most pressing topics around Prometheus today.

"We are super excited for PromCon to be coming home to Berlin. Prometheus was started in Berlin at Soundcloud in 2012. The first PromCon was hosted in Berlin and in between moved to Munich. This year we're hosting around 300 attendees at Radialsystem in Friedrichshain, Berlin. Berlin has a vibrant Prometheus community and many of the Prometheus team members live in the neighborhood. It is a great opportunity to network and connect with the Prometheus family who are all passionate about systems and service monitoring," said Matthias Loibl, Senior Software Engineer at Polar Signals and Prometheus team member who leads this year's PromCon program committee. "It will be a great event to learn about the latest developments from the Prometheus team itself and connect to some big-scale users of Prometheus up close."

FAQ about Prometheus 2.43 String Labels Optimization

March 21, 2023 by Julien Pivotto (@roidelapluie)

Prometheus 2.43 has just been released, and it brings some exciting features and enhancements. One of the significant improvements is the stringlabels release, which uses a new data structure for labels. This blog post will answer some frequently asked questions about the 2.43 release and the stringlabels optimizations.

What is the stringlabels release?

The stringlabels release is a Prometheus 2.43 version that uses a new data structure for labels. It stores all the label/values in a single string, resulting in a smaller heap size and some speedups in most cases. These optimizations are not shipped in the default binaries and require compiling Prometheus using the Go tag stringlabels.

Introducing Prometheus Agent Mode, an Efficient and Cloud-Native Way for Metric Forwarding

November 16, 2021 by Bartlomiej Plotka (@bwplotka)

Bartek Płotka has been a Prometheus Maintainer since 2019 and Principal Software Engineer at Red Hat. Co-author of the CNCF Thanos project. CNCF Ambassador and tech lead for the CNCF TAG Observability. In his free time, he writes a book titled "Efficient Go" with O'Reilly. Opinions are my own!

What I personally love in the Prometheus project, and one of the many reasons why I joined the team, was the laser focus on the project's goals. Prometheus was always about pushing boundaries when it comes to providing pragmatic, reliable, cheap, yet invaluable metric-based monitoring. Prometheus' ultra-stable and robust APIs, query language, and integration protocols (e.g. Remote Write and OpenMetrics) allowed the Cloud Native Computing Foundation (CNCF) metrics ecosystem to grow on those strong foundations. Amazing things happened as a result:

- We can see community exporters for getting metrics about virtually everything, e.g. containers, eBPF, Minecraft server statistics and even plants' health when gardening.

- Most people nowadays expect cloud-native software to have an HTTP/HTTPS /metrics endpoint that Prometheus can scrape. A concept developed in secret within Google and pioneered globally by the Prometheus project.

- The observability paradigm shifted. We see SREs and developers rely heavily on metrics from day one, which improves software resiliency, debuggability, and data-driven decisions!

In the end, we hardly see Kubernetes clusters without Prometheus running there.

Prometheus Conformance Program: First round of results

October 14, 2021 by Richard "RichiH" Hartmann

Today, we're launching the Prometheus Conformance Program with the goal of ensuring interoperability between different projects and vendors in the Prometheus monitoring space. While the legal paperwork still needs to be finalized, we ran tests, and we consider the below our first round of results. As part of this launch, Julius Volz updated his PromQL test results.

As a quick reminder: The program is called Prometheus Conformance; software can be compliant with specific tests, which result in a compatibility rating. The nomenclature might seem complex, but it allows us to speak about this topic without using endless word snakes.

On Ransomware Naming

June 10, 2021 by Richard "RichiH" Hartmann

As per Oscar Wilde, imitation is the sincerest form of flattery.

The names "Prometheus" and "Thanos" have recently been taken up by a ransomware group. There's not much we can do about that except to inform you that this is happening. There's not much you can do either, except be aware that this is happening.

While we do NOT have reason to believe that this group will try to trick anyone into downloading fake binaries of our projects, we still recommend following common supply chain & security practices. When deploying software, do it through one of those mechanisms:

Prometheus Conformance Program: Remote Write Compliance Test Results

May 5, 2021 by Richard "RichiH" Hartmann

As announced by CNCF and by ourselves, we're starting a Prometheus conformance program.

To give everyone an overview of where the ecosystem is before running tests officially, we wanted to show off the newest addition to our happy little bunch of test suites: The Prometheus Remote Write compliance test suite tests the sender part of the Remote Write protocol against our specification.

During Monday's PromCon, Tom Wilkie presented the test results from the time of recording a few weeks ago. In the live section, he already had an update. Two days later we have two more updates: The addition of the observability pipeline tool Vector, as well as new versions of existing systems.

Introducing the Prometheus Conformance Program

May 3, 2021 by Richard "RichiH" Hartmann

Prometheus is the standard for metric monitoring in the cloud native space and beyond. To ensure interoperability, to protect users from surprises, and to enable more parallel innovation, the Prometheus project is introducing the Prometheus Conformance Program with the help of CNCF to certify component compliance and Prometheus compatibility.

The CNCF Governing Board is expected to formally review and approve the program during their next meeting. We invite the wider community to help improve our tests in this ramp-up phase.

With the help of our extensive and expanding test suite, projects and vendors can determine their compliance with our specifications and their compatibility within the Prometheus ecosystem.

Introducing the '@' Modifier

February 18, 2021 by Ganesh Vernekar

Have you ever selected the top 10 time series for something, but instead of 10 you got 100? If yes, this one is for you. Let me walk you through what the underlying problem is and how I fixed it.

Currently, the topk() query only makes sense as an instant query where you get exactly k results, but when you run it as a range query, you can get many more than k results since every step is evaluated independently. This @ modifier lets you fix the ranking for all the steps in a range query.

In Prometheus v2.25.0, we have introduced a new PromQL modifier @. Similar to how the offset modifier lets you offset the evaluation of vector selectors, range vector selectors, and subqueries by a fixed duration relative to the evaluation time, the @ modifier lets you fix the evaluation for those selectors irrespective of the query evaluation time. Credit for this syntax goes to Björn Rabenstein.

<vector-selector> @ <timestamp>

<range-vector-selector> @ <timestamp>

<subquery> @ <timestamp>

The <timestamp> is a Unix timestamp, written as a float literal.
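As a short sketch of how this addresses the topk() problem described above, consider the queries below. The metric name http_requests_total and the timestamp value are placeholders chosen for illustration, and end() is assumed to be available as a special value that resolves to the end of the range query.

```promql
# Per-step ranking: every step of a range query computes its own top 5,
# so the resulting graph can contain many more than 5 distinct series.
topk(5, rate(http_requests_total[1m]))

# Fixed ranking: the right-hand side is evaluated at one fixed time (the end
# of the range) for every step, so the same 5 series are kept throughout.
rate(http_requests_total[1m])
  and
topk(5, rate(http_requests_total[1h] @ end()))

# An explicit Unix timestamp pins the selector to that instant instead.
http_requests_total @ 1609746000
```

The "and" operator keeps only those series on the left that also appear on the right, which is what turns the fixed top 5 into a filter for the per-step rate.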

Introducing Feature Flags

February 17, 2021 by Ganesh Vernekar

We have always made hard promises around stability and breaking changes following the SemVer model. That will remain the case.

As we want to be bolder in experimentation, we are planning to use feature flags more.

Starting with v2.25.0, we have introduced a new section called disabled features, which lists the features hidden behind the --enable-feature flag. You can expect more and more features to be added to this section in future releases.

The features in this list are considered experimental and come with the following considerations as long as they are still behind --enable-feature:

- API specs may change if the feature has any API (web API, code interfaces, etc.).

- The behavior of the feature may change.

- They may break some assumption that you might have had about Prometheus.

  - For example the assumption that a query does not look ahead of the evaluation time for samples, which will be broken by the @ modifier and negative offset.

- They may be unstable but we will try to keep them stable, of course.

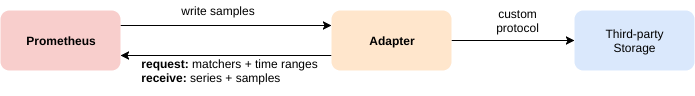

Remote Read Meets Streaming

October 10, 2019 by Bartlomiej Plotka (@bwplotka)

The new Prometheus version 2.13.0 is available and as always, it includes many fixes and improvements. You can read what's changed here. However, there is one feature that some projects and users were waiting for: a chunked, streamed version of the remote read API.

In this article I would like to present a deep dive of what we changed in the remote protocol, why it was changed and how to use it effectively.

Remote APIs

Since version 1.x, Prometheus has had the ability to interact directly with its storage using the remote API.

This API allows 3rd party systems to interact with metrics data through two methods:

- Write - receive samples pushed by Prometheus

- Read - pull samples from Prometheus

Both methods use HTTP with messages encoded with protobufs. The request and response for both methods are compressed using snappy.

Interview with ForgeRock

June 18, 2019 by Simon Pasquier

Continuing our series of interviews with users of Prometheus, Ludovic Poitou from ForgeRock talks about their monitoring journey.

Can you tell us about yourself and what ForgeRock does?

I’m Ludovic Poitou, Director of Product Management at ForgeRock, based near Grenoble, France. ForgeRock is an international identity and access management software company with more than 500 employees, founded in Norway in 2010, now headquartered in San Francisco, USA. We provide solutions to secure every online interaction with customers, employees, devices and things. We have more than 800 customers from finance companies to government services.

What was your pre-Prometheus monitoring experience?

The ForgeRock Identity Platform has always offered monitoring interfaces. But the platform is composed of 4 main products, each of them had different options. For example, the Directory Services product offered monitoring information through SNMP, JMX or LDAP, or even a RESTful API over HTTP in the most recent versions. Other products only had REST or JMX. As a result, monitoring the whole platform was complex and required tools that were able to integrate those protocols.

Interview with Hostinger

February 6, 2019 by Brian Brazil

Continuing our series of interviews with users of Prometheus, Donatas Abraitis from Hostinger talks about their monitoring journey.

Can you tell us about yourself and what Hostinger does?

I’m Donatas Abraitis, a systems engineer at Hostinger. Hostinger is a hosting company, as the name implies. We have had around 30 million clients since 2004, including the 000webhost.com project, a free web hosting provider.

What was your pre-Prometheus monitoring experience?

When Hostinger was quite a small company, only Nagios, Cacti, and Ganglia existed at that time in the market as open source monitoring tools. This is like telling young people what a floppy drive is, but Nagios and Cacti are still in development cycle today.

Even though no automation tools existed, Bash + Perl did the job. If you want to scale your team and yourself, automation should never be ignored. No automation - more human manual work involved.

At that time there were around 150 physical servers. For comparison, today we have around 2000 servers including VMs and physical boxes.

For networking gear, SNMP is still widely used. With the rise of "white box" switches SNMP becomes less necessary, as regular tools can be installed.

Instead of SNMP, you can run node_exporter, or any other exporter inside the switch to expose whatever metrics you need with the human-readable format. Beautiful is better than ugly, right?

We use CumulusOS, which in our case is mostly x86, so there is absolutely no problem running any kind of Linux stuff.

Subquery Support

January 28, 2019 by Ganesh Vernekar

Introduction

As the title suggests, a subquery is a part of a query, and allows you to do a range query within a query, which was not possible before. It has been a long-standing feature request: prometheus/prometheus/1227.

The pull request for subquery support was recently merged into Prometheus and will be available in Prometheus 2.7. Let’s learn more about it below.

Motivation

Sometimes, there are cases when you want to spot a problem using rate with lower resolution/range (e.g. 5m) while aggregating this data for higher range (e.g. max_over_time for 1h).

Previously, the above was not possible for a single PromQL query. If you wanted to have a range selection on a query for your alerting rules or graphing, it would require you to have a recording rule based on that query, and perform range selection on the metrics created by the recording rules. Example: max_over_time(rate(my_counter_total[5m])[1h]).

When you want some quick results on data spanning days or weeks, it can be quite a bit of a wait until you have enough data in your recording rules before it can be used. Forgetting to add recording rules can be frustrating. And it would be tedious to create a recording rule for each step of a query.

With subquery support, all the waiting and frustration is taken care of.
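As an illustration, the motivating example above could be written as a single query using the subquery form, in which a range and an optional resolution separated by a colon follow an instant-vector expression. The 1m resolution below is an arbitrary choice for this sketch.

```promql
# Evaluate rate(...) once per minute over the last hour, then take the
# maximum of those evaluations. No recording rule is required.
max_over_time(rate(my_counter_total[5m])[1h:1m])

# With the resolution omitted, the default evaluation interval is used.
max_over_time(rate(my_counter_total[5m])[1h:])
```

The colon is what distinguishes a subquery from a plain range selector, which can only be applied directly to a metric selector.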

Interview with Presslabs

August 23, 2018 by Brian Brazil

Continuing our series of interviews with users of Prometheus, Mile Rosu from Presslabs talks about their monitoring journey.

Can you tell us about yourself and what Presslabs does?

Presslabs is a high-performance managed WordPress hosting platform targeted at publishers, Enterprise brands and digital agencies which seek to offer a seamless experience to their website visitors, 100% of the time.

Recently, we have developed an innovative component to our core product: WordPress Business Intelligence. Users can now get real-time, actionable data in a comprehensive dashboard to support a short issue-to-deployment process and continuous improvement of their sites.

We support the seamless delivery of up to 2 billion pageviews per month, on a fleet of 100 machines entirely dedicated to managed WordPress hosting for demanding customers.

We’re currently on our mission to bring the best experience to WordPress publishers around the world. In this journey, Kubernetes facilitates our route to an upcoming standard in high availability WordPress hosting infrastructure.

Prometheus Graduates Within CNCF

August 9, 2018 by Richard Hartmann

We are happy to announce that as of today, Prometheus graduates within the CNCF.

Prometheus is the second project ever to make it to this tier. By graduating Prometheus, CNCF shows that it's confident in our code and feature velocity, our maturity and stability, and our governance and community processes. This also acts as an external verification of quality for anyone in internal discussions around choice of monitoring tool.

Since reaching incubation level, a lot of things happened; some of which stand out:

- We completely rewrote our storage back-end to support high churn in services

- We had a large push towards stability, especially with 2.3.2

- We started a documentation push with a special focus on making Prometheus adoption and joining the community easier

Implementing Custom Service Discovery

July 5, 2018 by Callum Styan

Prometheus contains built-in integrations for many service discovery (SD) systems such as Consul, Kubernetes, and public cloud providers such as Azure. However, we can’t provide integration implementations for every service discovery option out there. The Prometheus team is already stretched thin supporting the current set of SD integrations, so maintaining an integration for every possible SD option isn’t feasible. In many cases the current SD implementations have been contributed by people outside the team and then not maintained or tested well. We want to commit to only providing direct integration with service discovery mechanisms that we know we can maintain, and that work as intended. For this reason, there is currently a moratorium on new SD integrations.

However, we know there is still a desire to be able to integrate with other SD mechanisms, such as Docker Swarm. Recently a small code change plus an example was committed to the documentation directory within the Prometheus repository for implementing a custom service discovery integration without having to merge it into the main Prometheus binary. The code change allows us to make use of the internal Discovery Manager code to write another executable that interacts with a new SD mechanism and outputs a file that is compatible with Prometheus' file_sd. By co-locating Prometheus and our new executable we can configure Prometheus to read the file_sd-compatible output of our executable, and therefore scrape targets from that service discovery mechanism. In the future this will enable us to move SD integrations out of the main Prometheus binary, as well as to move stable SD integrations that make use of the adapter into the Prometheus discovery package.

Integrations using file_sd, such as those that are implemented with the adapter code, are listed here.

Let’s take a look at the example code.

Interview with Datawire

March 16, 2018 by Brian Brazil

Continuing our series of interviews with users of Prometheus, Richard Li from Datawire talks about how they transitioned to Prometheus.

Can you tell us about yourself and what Datawire does?

At Datawire, we make open source tools that help developers code faster on Kubernetes. Our projects include Telepresence, for local development of Kubernetes services; Ambassador, a Kubernetes-native API Gateway built on the Envoy Proxy; and Forge, a build/deployment system.

We run a number of mission critical cloud services in Kubernetes in AWS to support our open source efforts. These services support use cases such as dynamically provisioning dozens of Kubernetes clusters a day, which are then used by our automated test infrastructure.

What was your pre-Prometheus monitoring experience?

We used AWS CloudWatch. This was easy to set up, but we found that as we adopted a more distributed development model (microservices), we wanted more flexibility and control. For example, we wanted each team to be able to customize their monitoring on an as-needed basis, without requiring operational help.

Interview with Scalefastr

February 8, 2018 by Brian Brazil

Continuing our series of interviews with users of Prometheus, Kevin Burton from Scalefastr talks about how they are using Prometheus.

Can you tell us about yourself and what Scalefastr does?

My name is Kevin Burton and I’m the CEO of Scalefastr. My background is in distributed systems and I previously ran Datastreamer, a company that built a petabyte scale distributed social media crawler and search engine.

At Datastreamer we ran into scalability issues regarding our infrastructure and built out a high performance cluster based on Debian, Elasticsearch, Cassandra, and Kubernetes.

We found that many of our customers were also struggling with their infrastructure and I was amazed at how much they were paying for hosting large amounts of content on AWS and Google Cloud.

We continually evaluated what it costs to run in the cloud and for us our hosting costs would have been about 5-10x what we currently pay.

We made the decision to launch a new cloud platform based on Open Source and cloud native technologies like Kubernetes, Prometheus, Elasticsearch, Cassandra, Grafana, Etcd, etc.

We’re currently hosting a few customers in the petabyte scale and are soft launching our new platform this month.

Prometheus at CloudNativeCon 2017

November 29, 2017 by Tom Wilkie on behalf of the Prometheus team

Wednesday 6th December is Prometheus Day at CloudNativeCon Austin, and we’ve got a fantastic lineup of talks and events for you. Go to the Prometheus Salon for hands on advice on how best to monitor Kubernetes, attend a series of talks on various aspects of Prometheus and then hang out with some of the Prometheus developers at the CNCF booth, all followed by the Prometheus Happy Hour. Read on for more details...

Announcing Prometheus 2.0

November 8, 2017 by Fabian Reinartz on behalf of the Prometheus team

Nearly one and a half years ago, we released Prometheus 1.0 into the wild. The release marked a significant milestone for the project. We had reached a broad set of features that make up Prometheus' simple yet extremely powerful monitoring philosophy.

Since then we added and improved on various service discovery integrations, extended PromQL, and experimented with a first iteration on remote APIs to enable pluggable long-term storage solutions.

But what else has changed to merit a new major release?

PromCon 2017 Recap

September 4, 2017 by Julius Volz

What happened

Two weeks ago, Prometheus users and developers from all over the world came together in Munich for PromCon 2017, the second conference around the Prometheus monitoring system. The purpose of this event was to exchange knowledge and best practices and build professional connections around monitoring with Prometheus. Google's Munich office offered us a much larger space this year, which allowed us to grow from 80 to 220 attendees while still selling out!

Take a look at the recap video to get an impression of the event:

Prometheus 2.0 Alpha.3 with New Rule Format

June 22, 2017 by Goutham Veeramachaneni

Today we release the third alpha version of Prometheus 2.0. Aside from a variety of bug fixes in the new storage layer, it contains a few planned breaking changes.

Flag Changes

First, we moved to a new flag library, which uses the more common double-dash -- prefix for flags instead of the single dash Prometheus used so far. Deployments have to be adapted accordingly.

Additionally, some flags were removed with this alpha. The full list since Prometheus 1.0.0 is:

- web.telemetry-path

- All storage.remote.* flags

- All storage.local.* flags

- query.staleness-delta

- alertmanager.url

Interview with L’Atelier Animation

June 14, 2017 by Brian Brazil

Continuing our series of interviews with users of Prometheus, Philippe Panaite and Barthelemy Stevens from L’Atelier Animation talk about how they switched their animation studio from a mix of Nagios, Graphite and InfluxDB to Prometheus.

Can you tell us about yourself and what L’Atelier Animation does?

L’Atelier Animation is a 3D animation studio based in the beautiful city of Montreal, Canada. Our first feature film "Ballerina" (also known as "Leap") was released worldwide in 2017; the US release is expected later this year.

We’re currently hard at work on an animated TV series and on our second feature film. Our infrastructure consists of around 300 render blades, 150 workstations and twenty various servers. With the exception of a couple of Macs, everything runs on Linux (CentOS ) and not a single Windows machine.

Interview with iAdvize

May 17, 2017 by Brian Brazil

Continuing our series of interviews with users of Prometheus, Laurent COMMARIEU from iAdvize talks about how they replaced their legacy Nagios and Centreon monitoring with Prometheus.

Can you tell us about what iAdvize does?

I am Laurent COMMARIEU, a system engineer at iAdvize. I work within the 60 person R&D department in a team of 5 system engineers. Our job is mainly to ensure that applications, services and the underlying system are up and running. We are working with developers to ensure the easiest path for their code to production, and provide the necessary feedback at every step. That’s where monitoring is important.

iAdvize is a full stack conversational commerce platform. We provide an easy way for a brand to centrally interact with their customers, no matter the communication channel (chat, call, video, Facebook Pages, Facebook Messenger, Twitter, Instagram, WhatsApp, SMS, etc.). Our customers work in ecommerce, banking, travel, fashion, etc. in 40 countries. We are an international company of 200 employees with offices in France, UK, Germany, Spain and Italy. We raised $16 million in 2015.

Sneak Peek of Prometheus 2.0

April 10, 2017 by Fabian Reinartz

In July 2016 Prometheus reached a big milestone with its 1.0 release. Since then, plenty of new features like new service discovery integrations and our experimental remote APIs have been added. We also realized that new developments in the infrastructure space, in particular Kubernetes, allowed monitored environments to become significantly more dynamic. Unsurprisingly, this also brings new challenges to Prometheus and we identified performance bottlenecks in its storage layer.

Over the past few months we have been designing and implementing a new storage concept that addresses those bottlenecks and shows considerable performance improvements overall. It also paves the way to add features such as hot backups.

The changes are so fundamental that it will trigger a new major release: Prometheus 2.0. Important features and changes beyond the storage are planned before its stable release. However, today we are releasing an early alpha of Prometheus 2.0 to kick off the stabilization process of the new storage.

Interview with Europace

April 6, 2017 by Brian Brazil

Continuing our series of interviews with users of Prometheus, Tobias Gesellchen from Europace talks about how they discovered Prometheus.

Can you tell us about what Europace does?

Europace AG develops and operates the web-based EUROPACE financial marketplace, which is Germany’s largest platform for mortgages, building finance products and personal loans. A fully integrated system links about 400 partners – banks, insurers and financial product distributors. Several thousand users execute some 35,000 transactions worth a total of up to €4 billion on EUROPACE every month. Our engineers regularly blog at http://tech.europace.de/ and @EuropaceTech.

Interview with Weaveworks

February 20, 2017 by Brian Brazil

Continuing our series of interviews with users of Prometheus, Tom Wilkie from Weaveworks talks about how they chose Prometheus and are now building on it.

Can you tell us about Weaveworks?

Weaveworks offers Weave Cloud, a service which "operationalizes" microservices through a combination of open source projects and software as a service.

Weave Cloud consists of:

- Visualisation with Weave Scope

- Continuous Deployment with Weave Flux

- Networking with Weave Net, the container SDN

- Monitoring with Weave Cortex, our open source, distributed Prometheus-as-a-Service.

You can try Weave Cloud free for 60 days. For the latest on our products check out our blog, Twitter, or Slack (invite).

Interview with Canonical

November 16, 2016 by Brian Brazil

Continuing our series of interviews with users of Prometheus, Canonical talks about how they are transitioning to Prometheus.

Can you tell us about yourself and what Canonical does?

Canonical is probably best known as the company that sponsors Ubuntu Linux. We also produce or contribute to a number of other open-source projects including MAAS, Juju, and OpenStack, and provide commercial support for these products. Ubuntu powers the majority of OpenStack deployments, with 55% of production clouds and 58% of large cloud deployments.

My group, BootStack, is our fully managed private cloud service. We build and operate OpenStack clouds for Canonical customers.

Interview with JustWatch

October 12, 2016 by Brian Brazil

Continuing our series of interviews with users of Prometheus, JustWatch talks about how they established their monitoring.

Can you tell us about yourself and what JustWatch does?

For consumers, JustWatch is a streaming search engine that helps to find out where to watch movies and TV shows legally online and in theaters. You can search movie content across all major streaming providers like Netflix, HBO, Amazon Video, iTunes, Google Play, and many others in 17 countries.

For our clients like movie studios or Video on Demand providers, we are an international movie marketing company that collects anonymized data about purchase behavior and movie taste of fans worldwide from our consumer apps. We help studios to advertise their content to the right audience and make digital video advertising a lot more efficient in minimizing waste coverage.

Interview with Compose

September 21, 2016 by Brian Brazil

Continuing our series of interviews with users of Prometheus, Compose talks about their monitoring journey from Graphite and InfluxDB to Prometheus.

Can you tell us about yourself and what Compose does?

Compose delivers production-ready database clusters as a service to developers around the world. An app developer can come to us and in a few clicks have a multi-host, highly available, automatically backed up and secure database ready in minutes. Those database deployments then autoscale up as demand increases so a developer can spend their time on building their great apps, not on running their database.

We have tens of clusters of hosts across at least two regions in each of AWS, Google Cloud Platform and SoftLayer. Each cluster spans availability zones where supported and is home to around 1000 highly-available database deployments in their own private networks. More regions and providers are in the works.

Interview with DigitalOcean

September 14, 2016 by Brian Brazil

Next in our series of interviews with users of Prometheus, DigitalOcean talks about how they use Prometheus. Carlos Amedee also talked about the social aspects of the rollout at PromCon 2016.

Can you tell us about yourself and what DigitalOcean does?

My name is Ian Hansen and I work on the platform metrics team. DigitalOcean provides simple cloud computing. To date, we’ve created 20 million Droplets (SSD cloud servers) across 13 regions. We also recently released a new Block Storage product.

Interview with ShuttleCloud

September 7, 2016 by Brian Brazil

Continuing our series of interviews with users of Prometheus, ShuttleCloud talks about how they began using Prometheus. Ignacio from ShuttleCloud also explained how Prometheus Is Good for Your Small Startup at PromCon 2016.

What does ShuttleCloud do?

ShuttleCloud is the world’s most scalable email and contacts data importing system. We help some of the leading email and address book providers, including Google and Comcast, increase user growth and engagement by automating the switching experience through data import.

By integrating our API into their offerings, our customers allow their users to easily migrate their email and contacts from one participating provider to another, reducing the friction users face when switching to a new provider. The 24/7 email providers supported include all major US internet service providers: Comcast, Time Warner Cable, AT&T, Verizon, and more.

By offering end users a simple path for migrating their emails (while keeping complete control over the import tool’s UI), our customers dramatically improve user activation and onboarding.

PromCon 2016 - It's a wrap!

September 4, 2016 by Julius Volz

What happened

Last week, eighty Prometheus users and developers from around the world came together for two days in Berlin for the first-ever conference about the Prometheus monitoring system: PromCon 2016. The goal of this conference was to exchange knowledge, best practices, and experience gained using Prometheus. We also wanted to grow the community and help people build professional connections around service monitoring. Here are some impressions from the first morning:

Pull doesn't scale - or does it?

July 23, 2016 by Julius Volz

Let's talk about a particularly persistent myth. Whenever there is a discussion about monitoring systems and Prometheus's pull-based metrics collection approach comes up, someone inevitably chimes in about how a pull-based approach just “fundamentally doesn't scale”. The given reasons are often vague or only apply to systems that are fundamentally different from Prometheus. In fact, we have worked with pull-based monitoring at the largest scales, and this claim runs counter to our own operational experience.

We already have an FAQ entry about why Prometheus chooses pull over push, but it does not focus specifically on scaling aspects. Let's have a closer look at the usual misconceptions around this claim and analyze whether and how they would apply to Prometheus.

Prometheus reaches 1.0

July 18, 2016 by Fabian Reinartz on behalf of the Prometheus team

In January, we published a blog post on Prometheus’s first year of public existence, summarizing what has been an amazing journey for us, and hopefully an innovative and useful monitoring solution for you. Since then, Prometheus has also joined the Cloud Native Computing Foundation, where we are in good company, as the second charter project after Kubernetes.

Our recent work has focused on delivering a stable API and user interface, marked by version 1.0 of Prometheus. We’re thrilled to announce that we’ve reached this goal, and Prometheus 1.0 is available today.

What does 1.0 mean for you?

If you have been using Prometheus for a while, you may have noticed that the rate and impact of breaking changes significantly decreased over the past year. In the same spirit, reaching 1.0 means that subsequent 1.x releases will remain API stable. Upgrades won’t break programs built atop the Prometheus API, and updates won’t require storage re-initialization or deployment changes. Custom dashboards and alerts will remain intact across 1.x version updates as well. We’re confident Prometheus 1.0 is a solid monitoring solution. Now that the Prometheus server has reached a stable API state, other modules will follow it to their own stable version 1.0 releases over time.

Prometheus to Join the Cloud Native Computing Foundation

May 9, 2016 by Julius Volz on behalf of the Prometheus core developers

Since the inception of Prometheus, we have been looking for a sustainable governance model for the project that is independent of any single company. Recently, we have been in discussions with the newly formed Cloud Native Computing Foundation (CNCF), which is backed by Google, CoreOS, Docker, Weaveworks, Mesosphere, and other leading infrastructure companies.

Today, we are excited to announce that the CNCF's Technical Oversight Committee voted unanimously to accept Prometheus as a second hosted project after Kubernetes! You can find more information about these plans in the official press release by the CNCF.

When (not) to use varbit chunks

May 8, 2016 by Björn “Beorn” Rabenstein

The embedded time series database (TSDB) of the Prometheus server organizes the raw sample data of each time series in chunks of constant 1024 bytes size. In addition to the raw sample data, a chunk contains some meta-data, which allows the selection of a different encoding for each chunk. The most fundamental distinction is the encoding version. You select the version for newly created chunks via the command line flag -storage.local.chunk-encoding-version. Up to now, there were only two supported versions: 0 for the original delta encoding, and 1 for the improved double-delta encoding. With release 0.18.0, we added version 2, which is another variety of double-delta encoding. We call it varbit encoding because it involves a variable bit-width per sample within the chunk. While version 1 is superior to version 0 in almost every aspect, there is a real trade-off between version 1 and 2. This blog post will help you to make that decision. Version 1 remains the default encoding, so if you want to try out version 2 after reading this article, you have to select it explicitly via the command line flag. There is no harm in switching back and forth, but note that existing chunks will not change their encoding version once they have been created. However, these chunks will gradually be phased out according to the configured retention time and will thus be replaced by chunks with the encoding specified in the command-line flag.
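To give a feel for why a variable bit-width per sample can pay off, here is a minimal Go sketch of the general double-delta idea applied to timestamps. It is an illustration under stated assumptions, not the chunk format Prometheus actually writes: the function names `doubleDelta` and `restore` and the sample values are made up for this example.

```go
package main

import "fmt"

// doubleDelta returns the first value, the first delta, and the
// delta-of-deltas for all remaining samples. Illustrative only: this is the
// generic technique, not the on-disk chunk layout used by Prometheus.
func doubleDelta(ts []int64) (first, firstDelta int64, dods []int64) {
	if len(ts) == 0 {
		return 0, 0, nil
	}
	first = ts[0]
	if len(ts) == 1 {
		return first, 0, nil
	}
	firstDelta = ts[1] - ts[0]
	prevDelta := firstDelta
	for i := 2; i < len(ts); i++ {
		delta := ts[i] - ts[i-1]
		dods = append(dods, delta-prevDelta)
		prevDelta = delta
	}
	return first, firstDelta, dods
}

// restore reverses doubleDelta; n is the original number of samples.
func restore(first, firstDelta int64, dods []int64, n int) []int64 {
	if n == 0 {
		return nil
	}
	out := []int64{first}
	if n == 1 {
		return out
	}
	delta := firstDelta
	cur := first + delta
	out = append(out, cur)
	for _, d := range dods {
		delta += d
		cur += delta
		out = append(out, cur)
	}
	return out
}

func main() {
	// Hypothetical scrape timestamps, roughly 15 apart with a little jitter.
	ts := []int64{1000, 1015, 1030, 1046, 1060}
	first, firstDelta, dods := doubleDelta(ts)
	fmt.Println(first, firstDelta, dods) // prints: 1000 15 [0 1 -2]
	fmt.Println(restore(first, firstDelta, dods, len(ts)))
}
```

For regularly scraped series the delta-of-deltas stay close to zero, so most samples need only a few bits. A fixed bit-width has to reserve room for the worst case, which is the kind of trade-off the encoding versions differ on.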

Interview with ShowMax

May 1, 2016 by Brian Brazil

This is the second in a series of interviews with users of Prometheus, allowing them to share their experiences of evaluating and using Prometheus.

Can you tell us about yourself and what ShowMax does?

I’m Antonin Kral, and I’m leading research and architecture for ShowMax. Before that, I’ve held architectural and CTO roles for the past 12 years.

ShowMax is a subscription video on demand service that launched in South Africa in 2015. We’ve got an extensive content catalogue with more than 20,000 episodes of TV shows and movies. Our service is currently available in 65 countries worldwide. While better known rivals are skirmishing in America and Europe, ShowMax is battling a more difficult problem: how do you binge-watch in a barely connected village in sub-Saharan Africa? Already 35% of video around the world is streamed, but there are still so many places the revolution has left untouched.

We are managing about 50 services running mostly on private clusters built around CoreOS. They are primarily handling API requests from our clients (Android, iOS, AppleTV, JavaScript, Samsung TV, LG TV etc), while some of them are used internally. One of the biggest internal pipelines is video encoding which can occupy 400+ physical servers when handling large ingestion batches.

The majority of our back-end services are written in Ruby, Go or Python. We use EventMachine when writing apps in Ruby (Goliath on MRI, Puma on JRuby). Go is typically used in apps that require large throughput and don’t have so much business logic. We’re very happy with Falcon for services written in Python. Data is stored in PostgreSQL and ElasticSearch clusters. We use etcd and custom tooling for configuring Varnishes for routing requests.

Interview with Life360

March 23, 2016 by Brian Brazil

This is the first in a series of interviews with users of Prometheus, allowing them to share their experiences of evaluating and using Prometheus. Our first interview is with Daniel from Life360.

Can you tell us about yourself and what Life360 does?

I’m Daniel Ben Yosef, a.k.a. dby, and I’m an Infrastructure Engineer for Life360, and before that, I’ve held systems engineering roles for the past 9 years.

Life360 creates technology that helps families stay connected; we’re the Family Network app for families. We’re quite busy handling these families - at peak we serve 700k requests per minute for 70 million registered families.

We manage around 20 services in production, mostly handling location requests from mobile clients (Android, iOS, and Windows Phone), spanning 150+ instances at peak. Redundancy and high-availability are our goals and we strive to maintain 100% uptime whenever possible because families trust us to be available.

We hold user data in both our MySQL multi-master cluster and in our 12-node Cassandra ring which holds around 4TB of data at any given time. We have services written in Go, Python, PHP, as well as plans to introduce Java to our stack. We use Consul for service discovery, and of course our Prometheus setup is integrated with it.

Custom Alertmanager Templates

March 3, 2016 by Fabian Reinartz

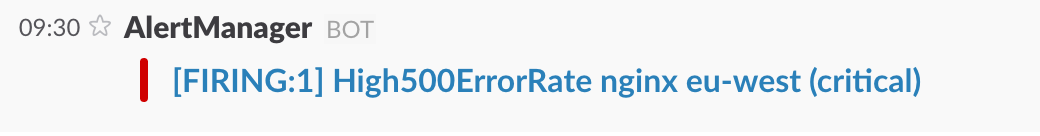

The Alertmanager handles alerts sent by Prometheus servers and sends notifications about them to different receivers based on their labels.

A receiver can be one of many different integrations such as PagerDuty, Slack, email, or a custom integration via the generic webhook interface (for example JIRA).

Templates

The messages sent to receivers are constructed via templates. Alertmanager comes with default templates but also allows defining custom ones.

In this blog post, we will walk through a simple customization of Slack notifications.

We use this simple Alertmanager configuration that sends all alerts to Slack:

global:
  slack_api_url: '<slack_webhook_url>'

route:
  receiver: 'slack-notifications'
  # All alerts in a notification have the same value for these labels.
  group_by: [alertname, datacenter, app]

receivers:
- name: 'slack-notifications'
  slack_configs:
  - channel: '#alerts'

By default, a Slack message sent by Alertmanager looks like this:

It shows us that there is one firing alert, followed by the label values of the alert grouping (alertname, datacenter, app) and further label values the alerts have in common (critical).
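Alertmanager's notification templates are written in Go's templating language. The following Go sketch shows how such a template might turn a group of alerts into a Slack-style title. It is a simplified illustration: the `notification` struct, the `toUpper` helper registered here, the template text, and the label values are all assumptions made for this example and stand in for the richer data Alertmanager passes to real templates.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// notification is a hypothetical, simplified stand-in for the data a
// notification template receives. It is not Alertmanager's actual type.
type notification struct {
	Status      string
	NumFiring   int
	GroupLabels map[string]string
}

// slackTitle is an example template: status, number of firing alerts, and
// the values of the grouping labels (alertname, datacenter, app).
const slackTitle = `[{{ .Status | toUpper }}:{{ .NumFiring }}] ` +
	`{{ .GroupLabels.alertname }} ({{ .GroupLabels.datacenter }}/{{ .GroupLabels.app }})`

// render executes the example template against one notification.
func render(n notification) (string, error) {
	funcs := template.FuncMap{"toUpper": strings.ToUpper}
	tmpl, err := template.New("title").Funcs(funcs).Parse(slackTitle)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	if err := tmpl.Execute(&sb, n); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	title, err := render(notification{
		Status:    "firing",
		NumFiring: 1,
		GroupLabels: map[string]string{
			"alertname":  "HighLatency", // made-up label values
			"datacenter": "dc1",
			"app":        "web",
		},
	})
	if err != nil {
		panic(err)
	}
	fmt.Println(title) // prints: [FIRING:1] HighLatency (dc1/web)
}
```

Because the grouping labels are identical for every alert in a notification, the template can read them once from the group instead of looping over individual alerts.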

One Year of Open Prometheus Development

January 26, 2016 by Julius Volz

The beginning

A year ago today, we officially announced Prometheus to the wider world. This is a great opportunity for us to look back and share some of the wonderful things that have happened to the project since then. But first, let's start at the beginning.

Although we had already started Prometheus as an open-source project on GitHub in 2012, we didn't make noise about it at first. We wanted to give the project time to mature and be able to experiment without friction. Prometheus was gradually introduced for production monitoring at SoundCloud in 2013 and then saw more and more usage within the company, as well as some early adoption by our friends at Docker and Boxever in 2014. Over the years, Prometheus was growing more and more mature and although it was already solving people's monitoring problems, it was still unknown to the wider public.

Custom service discovery with etcd

August 17, 2015 by Fabian Reinartz

In a previous post we introduced numerous new ways of doing service discovery in Prometheus. Since then a lot has happened. We improved the internal implementation and received fantastic contributions from our community, adding support for service discovery with Kubernetes and Marathon. They will become available with the release of version 0.16.

We also touched on the topic of custom service discovery.

Not every type of service discovery is generic enough to be directly included in Prometheus. Chances are your organisation has a proprietary system in place and you just have to make it work with Prometheus. This does not mean that you cannot enjoy the benefits of automatically discovering new monitoring targets.

In this post we will implement a small utility program that connects a custom service discovery approach based on etcd, the highly consistent distributed key-value store, to Prometheus.
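One plausible way to build such a bridge is to have the utility write a target file for Prometheus's file-based service discovery. The Go sketch below covers only that last step, as an illustration rather than the post's actual program. It assumes the service instances have already been read from etcd into a plain map; the etcd access itself is omitted, and the `targetGroup` type, the `buildTargetGroups` function, and the addresses are hypothetical names chosen for this example.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
)

// targetGroup follows the JSON shape of a file-based service discovery
// entry: a list of targets plus labels attached to all of them.
type targetGroup struct {
	Targets []string          `json:"targets"`
	Labels  map[string]string `json:"labels,omitempty"`
}

// buildTargetGroups turns a map of service name to instance addresses
// (assumed to have been read from etcd elsewhere) into one target group
// per service, sorted by name so the output is stable between runs.
func buildTargetGroups(services map[string][]string) []targetGroup {
	names := make([]string, 0, len(services))
	for name := range services {
		names = append(names, name)
	}
	sort.Strings(names)

	groups := make([]targetGroup, 0, len(names))
	for _, name := range names {
		groups = append(groups, targetGroup{
			Targets: services[name],
			Labels:  map[string]string{"job": name},
		})
	}
	return groups
}

func main() {
	// Made-up services and addresses standing in for data from etcd.
	services := map[string][]string{
		"web": {"10.0.0.1:8080", "10.0.0.2:8080"},
		"db":  {"10.0.0.3:9104"},
	}
	out, err := json.MarshalIndent(buildTargetGroups(services), "", "  ")
	if err != nil {
		panic(err)
	}
	// A real utility would write this to the file Prometheus watches.
	fmt.Println(string(out))
}
```

Keeping the output order stable avoids rewriting the file when nothing has changed, which in turn avoids needless target reloads.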

Monitoring DreamHack - the World's Largest Digital Festival

June 24, 2015 by Christian Svensson (DreamHack Network Team)

Editor's note: This article is a guest post written by a Prometheus user.

If you are operating the network for 10,000's of demanding gamers, you need to really know what is going on inside your network. Oh, and everything needs to be built from scratch in just five days.

If you have never heard about DreamHack before, here is the pitch: Bring 20,000 people together and have the majority of them bring their own computer. Mix in professional gaming (eSports), programming contests, and live music concerts. The result is the world's largest festival dedicated solely to everything digital.

To make such an event possible, there needs to be a lot of infrastructure in place. Ordinary infrastructures of this size take months to build, but the crew at DreamHack builds everything from scratch in just five days. This of course includes stuff like configuring network switches, but also building the electricity distribution, setting up stores for food and drinks, and even building the actual tables.

The team that builds and operates everything related to the network is officially called the Network team, but we usually refer to ourselves as tech or dhtech. This post is going to focus on the work of dhtech and how we used Prometheus during DreamHack Summer 2015 to try to kick our monitoring up another notch.

Practical Anomaly Detection

June 18, 2015 by Brian Brazil

In his Open Letter To Monitoring/Metrics/Alerting Companies, John Allspaw asserts that attempting "to detect anomalies perfectly, at the right time, is not possible".

I have seen several attempts by talented engineers to build systems to automatically detect and diagnose problems based on time series data. While it is certainly possible to get a demonstration working, the data always turned out to be too noisy to make this approach work for anything but the simplest of real-world systems.

All hope is not lost though. There are many common anomalies which you can detect and handle with custom-built rules. The Prometheus query language gives you the tools to discover these anomalies while avoiding false positives.

Advanced Service Discovery in Prometheus 0.14.0

June 1, 2015 by Fabian Reinartz, Julius Volz

This week we released Prometheus v0.14.0 — a version with many long-awaited additions and improvements.

On the user side, Prometheus now supports new service discovery mechanisms. In addition to DNS-SRV records, it now supports Consul out of the box, and a file-based interface allows you to connect your own discovery mechanisms. Over time, we plan to add other common service discovery mechanisms to Prometheus.

Aside from many smaller fixes and improvements, you can now also reload your configuration during runtime by sending a SIGHUP to the Prometheus process. For a full list of changes, check the changelog for this release.

In this blog post, we will take a closer look at the built-in service discovery mechanisms and provide some practical examples. As an additional resource, see Prometheus's configuration documentation.

Prometheus Monitoring Spreads through the Internet

April 24, 2015 by Brian Brazil

It has been almost three months since we publicly announced Prometheus version 0.10.0, and we're now at version 0.13.1.

SoundCloud's announcement blog post remains the best overview of the key components of Prometheus, but there has been a lot of other online activity around Prometheus. This post will let you catch up on anything you missed.

In the future, we will use this blog to publish more articles and announcements to help you get the most out of Prometheus.